As you may have noticed from the links on the right, there are several ERDAS-oriented blogs out there. I've been experimenting with Yahoo Pipes, which has impressive functionality: it allows you to combine and manipulate multiple feeds. I embedded the feed results in a web page here, so please feel free to check it out. It is also located in the links section on the right: e-planet.

Friday, May 30, 2008

Aggregated ERDAS Posts

Thursday, May 29, 2008

Mapping Accuracy

The focus of today’s post is the importance of mapping accuracy and how it relates to geospatial data. Personally I think accuracy is one of the most difficult and misunderstood aspects of our industry. How many people out there in the geospatial industry have used data (vectors, orthos, or other spatial data) where you have no definite information about the accuracy of the data? How often can people confidently state that their data conforms to an accuracy standard? Furthermore, how many people have detailed information on the lineage of their data? For example, if you have a “roads” vector layer – where did it come from? Was it collected from high-accuracy aerial photography (where you know the triangulation results) in a stereo feature extraction system? Or was it digitized in 2D from a scanned map of indeterminate origins? These are critical questions for applications that require accurate data for making business decisions. The importance of accuracy is also application-specific. For example, in the

For high-accuracy geospatial projects, customers (the organization purchasing the data) specify the accuracy requirements. Right now there are several standards for terrain, orthophoto, and mapping data. The USGS National Geospatial Program Standards are a good example. Other standards such as Federal Geospatial Data Committee standards can be found on the ASPRS Standards page. For a detailed look at accuracy standards, check out the Idaho Geospatial Committee's document for review on Aerial Mapping and Orthophoto Standards. It discusses accuracy in terms of the end-to-end photogrammetric workflow, from planning through final product generation.

I would also like to highlight Fugro EarthData’s recent Spring 2008 newsletter. One of the pieces, “The Call for a New Lidar Accuracy Reporting Framework”, features a discussion by industry experts Lewis Graham (GeoCue Corporation) and Karen Schuckman (

Monday, May 26, 2008

Sensor Spotlight: WorldView-1

WorldView-1 is a satellite sensor that was launched in September 2007. Built for DigitalGlobe by another Colorado-based company called Ball Aerospace & Technologies Corp, the satellite is in orbit at an altitude of 496 kilometers. There's a great gallery of the construction of the satellite here.

There is a specification sheet on the DG website that highlights the features and benefits of the sensor. WorldView-1 is a panchromatic sensor with a GSD of 0.5 meters at nadir and 0.59 meters at 25 degrees off-nadir. This makes it one of the highest resolution commercial remote sensing satellites on the market today. See a gallery of imagery here.

Imagery can be purchased at various levels of processing. These include:

Basic: the imagery is provided with a camera model and radiometric/internal geometry distortions are removed.

Standard: georeferenced imagery (but not orthorectified).

Orthorectified: 0.5 meter panchromatic orthos.

Basic Stereo Pair: the imagery has orientation information that allows for 3D content generation in a photogrammetric system.

These products give consumers a lot of freedom in determining the best solution for them. For example, if you want complete control over all the photogrammetric processing, then the Basic product will be the best choice. For GIS users that want an imagery backdrop for their application, the orthorectified product is a good option. For users that don't have any ground control (for triangulation) but still want to extract 3D information in a photogrammetric system, the Basic Stereo Pair is a good solution. This option is also good for remote areas where collecting ground control is difficult, expensive or dangerous.

We added support for WorldView-1 data in the LPS 9.2 release, which will support all the products mentioned above. Specifically, you could use LPS to triangulate the imagery, adjust the radiometry, extract and edit terrain, extract 3D features, create orthophotos, and create final image mosaics.

Lastly, for more information on satellite photogrammetry check out this article in the Earth Imaging Journal. It is written with IKONOS imagery as the example but is applicable to WorldView-1 data processing as well.

Thursday, May 22, 2008

Angkor Wat in 3D

Today I'd like to highlight a project from the ETH's Institute of Geodesy and Photogrammetry in Zurich, Switzerland.

The project, "Reality-based 3D modeling of the Angkorian temples using aerial images", was performed in 2006 and focused on the start-to-finish processing workflow for 3D modeling of the temple complex near Siem Reap, Cambodia. The project area was captured by a Leica RC20 camera in 1997 and the imagery was provided to ETH by the Japan International Cooperation Agency. The project website outlines the challenges (e.g. lack of ground control points within the project area) and the processing methods (including LPS) employed during the creation of the 3D models of the temples.

The downloads section of the project page includes an excellent paper outlining the entire process.

If you haven't been to Angkor Wat, it is an amazing place. Here are a few photos I took there a couple of years ago:

Tuesday, May 20, 2008

More China Earthquake Imagery

Here's some good before and after satellite imagery of an earthquake area - although no mention of which satellite captured it...

Monday, May 19, 2008

LPS Terrain Editor: Terrain Editing Tip

For today's post I thought I would share a tip for a method of quickly editing TIN terrain datasets in LPS. In the LPS 9.2 Terrain Editor, we added a new "Terrain Following Cursor" mode. This was added as an icon in the Terrain Editing panel:

The terrain following cursor snaps the floating cursor to the height of the terrain for whatever XY location you move the cursor to. It operates in either an "on" or "off" mode, so you can easily roam around your imagery and the Z value (height) of the floating cursor will adjust automatically based on the terrain.
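To make the snapping idea concrete, here is a minimal Python sketch of how a cursor height could be derived from a TIN: take the triangle under the cursor's XY location and interpolate Z with barycentric weights. This is an illustration under my own assumptions, not a description of how LPS implements the feature; the function name and the sample triangle are hypothetical.

```python
def interpolate_z(point_xy, a, b, c):
    """Interpolate terrain height at point_xy inside TIN triangle (a, b, c).

    a, b, c are (x, y, z) mass points. Returns None when the point lies
    outside the triangle, so a caller could go on to test other triangles.
    """
    x, y = point_xy
    (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) = a, b, c

    # The determinant is twice the signed triangle area; zero means degenerate.
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    if det == 0:
        raise ValueError("degenerate triangle")

    # Barycentric weights of the cursor position.
    w1 = ((y2 - y3) * (x - x3) + (x3 - x2) * (y - y3)) / det
    w2 = ((y3 - y1) * (x - x3) + (x1 - x3) * (y - y3)) / det
    w3 = 1.0 - w1 - w2

    # A clearly negative weight means the cursor is outside this triangle.
    if min(w1, w2, w3) < -1e-9:
        return None
    return w1 * z1 + w2 * z2 + w3 * z3


# Hypothetical triangle lying on the plane z = 10 + x + 2y.
triangle = ((0.0, 0.0, 10.0), (10.0, 0.0, 20.0), (0.0, 10.0, 30.0))
cursor_z = interpolate_z((2.0, 2.0), *triangle)
print(f"Cursor Z snapped to terrain: {cursor_z:.2f}")
```

In this picture, a spike is simply a mass point whose Z sits well above the surface interpolated from its neighbors, which is why a cursor that hugs the surface makes such points easy to spot.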

So why is this important?

Because it allows you to remove erroneous elevation spikes very quickly! When used in conjunction with the "Delete Tool" you can quickly identify points that are off the ground and delete them, or alternatively adjust them to their correct height. You can use the Terrain Following Cursor mode with any edit tool - here it is while using the delete tool:

Here's what the surface looks like after removing the post (a single mouse click). This can be quite useful as a quality control tool - perhaps after completing area editing operations. After deleting posts, the floating cursor snaps back down to the surface. This allows you to quickly maneuver through a scene removing (or adjusting) TIN mass points. On a final note, you can even map Terrain Following Cursor mode as an event on your input device (e.g. middle-mouse button, or a Topomouse button).

Saturday, May 17, 2008

China Earthquake Aerial Photography

There has been extensive media coverage of the recent earthquake in China. This was a major event: a magnitude 7.9 quake that did some serious damage. I noticed yesterday that Newsweek created an interactive page showing some aerial shots of the damage. The images of Yingxiu and Dujiangyan show how the areas around the epicenter were absolutely flattened.

Here is a link to the USGS details page on the event. The "Maps" tab has several types of maps available, including a KML file of recent earthquake activity (in Google Earth below). It will be interesting to see if other geospatial datasets are made available of the disaster area in time - I would think there is a lot of aerial reconnaissance going on...

Thursday, May 15, 2008

Signup for the IMAGINE Objective Beta Program

In case you missed the press release yesterday, the beta release of IMAGINE Objective has been announced. IMAGINE Objective is a tool for multi-scale image classification and feature extraction. I haven't had a chance to directly use the tool, but I've seen several demonstrations and the technology is very promising - I'd definitely encourage people to try it out. I think it can be particularly useful for update mapping applications (e.g. when an organization gets new orthos and needs to update buildings in a newly constructed subdivision that doesn't appear on their previous ortho coverage).

The beta sign-up page is here.

For more detailed info on IMAGINE Objective, check out the feature extraction solution paper. One thing to note: at this point the tool is focused on 2D feature extraction: it does not automatically extract 3D features. Unfortunately nobody has cracked the nut on automatic photogrammetric compilation! For now the 3D tools remain either manual or semi-automatic.

Monday, May 12, 2008

Historic Aerials: Visualizing Change Over Time

There's been a fair bit written on Historic Aerials already, but check out the new article in V1 Magazine. I've been a fan of the Historic Aerials site for a while now, and a few weeks ago we had a chance to interview them. A lot of the comments from the discussion are encapsulated in the article, and overall it was quite interesting to hear Jim and Brett talk about the trials and tribulations of working with historic photography. They are heavy LPS users (mainly for orthorectification and image processing) and have processed massive quantities of orthos while building content for the site.

It's easy to spend a lot of time on the site: if you want to see a good example of suburban Atlanta's development check out our ERDAS office location:

5050 Peachtree Corners Circle, Norcross GA. There's imagery from 1955, 1960, 1972, 1978, 1988, 1993, and 2007. When looking at the older images it becomes apparent that the entire area was developed over the past 20-30 years.

Make sure you check out the "Compare Two Years" option as well - it allows you to compare a second year to your currently loaded year and then adds a "swiping" capability to the viewer. Very cool!

Sunday, May 11, 2008

Dodging: An Art and Science

A couple of weeks ago I wrote a post covering an introduction to dodging. It outlined typical radiometric problems, introduced the concept of dodging, and then went into statistics generation.

Today I will focus on the actual dodging process. For the example data I will use some frame camera imagery covering Laguna Beach, California (from the LPS Example Data).

Here's a screen capture of the original undodged imagery:

It is apparent that the radiometry is uneven. In particular, the bottom right quarter of the image has a whitish hue. You can see that the hills should be the same color as the rest of the scene, but instead they are discolored and uneven throughout. In addition, there are several radiometrically inconsistent areas throughout the image. For example, the urban area on the bottom-left looks completely washed out (lacking contrast), as does the area under development just above it.

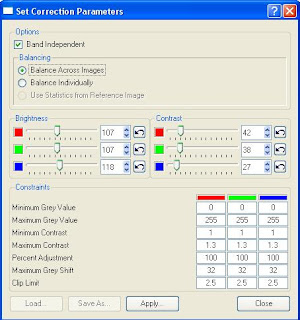

After generating statistics (discussed in the previous post), the next step involves adjusting the correction parameters. Using the statistics (which describe an image or a group of images), the dodging algorithm identifies a "target" output value for each pixel. For example, the input DN value of a specific pixel in one band may be 125, and the dodging process identifies a target "adjusted" DN value for it. Constraints are then used so that extreme changes to the imagery are not applied. Here are the ImageEqualizer constraints and correction parameters for the Laguna image above:

- Band Independent: if checked, allows you to adjust bands independently. This is useful if your imagery is skewed in one band (e.g. your imagery has a "reddish" hue and you want to align the red band with the green and blue bands).

- The balancing options allow you to adjust the images individually or as a group. This is a useful option if you are adjusting images that have radiometric differences across the images. For example, if you have flight lines that were flown on different days with different lighting conditions you would definitely want to balance across images to even up the radiometry (if the final product is an orthomosaic).

- The brightness and contrast sliders are relatively self-explanatory. You can adjust them for each band. The "undo" button will take you back to the default brightness/contrast value.

- The constraints section allows for control over how the dodging algorithm is applied to an image or group of images. It comes with pre-defined defaults (which you can see above). These parameters are critical for tweaking the image adjustments.

The original image is on the left and the dodged image is on the right. You can see that a lot of the problems in the original image have been resolved. However, by adjusting the parameters it can still be improved. The contrast is still fairly low, and the light area on the bottom right is still noticeable (although much less so).

In an attempt to improve it even more, I adjusted two of the constraint parameters. These were the "Maximum Contrast" and "Maximum Grey Shift" parameters - which are two highly critical constraints in any dodging process. The max contrast constraint has a default value of 1.3, which attempts to limit excessive contrast adjustment in low contrast areas. Increasing this value will bump up the limits on "target" contrast adjustments. Since the input imagery has some low-contrast areas, I bumped this constraint up to 1.8. Maximum Grey Shift applies a limit, by default 32, on how much a pixel's DN value can shift. For example, a specific pixel may have a DN value of 120 in one band. The dodging algorithm may determine that the "target" value should be 160 (i.e. it needs a pretty big adjustment). This constraint with the default 32 value will limit the final adjusted value to 152. The reason for this is to limit extreme changes in the imagery. Since I could still see at least minor unevenness in the radiometry with the default constraints, I increased the max grey shift to 50.
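The Maximum Grey Shift arithmetic can be sketched in a few lines of Python. This only mirrors the behavior described in the text (a limit on how far a DN value may move toward its target); it is not the ImageEqualizer implementation, and the function name is hypothetical.

```python
def constrain_grey_shift(input_dn, target_dn, max_grey_shift=32):
    """Move input_dn toward target_dn, but by no more than max_grey_shift.

    The limit is applied symmetrically here, so both brightening and
    darkening are capped (an assumption made for this sketch).
    """
    shift = target_dn - input_dn
    limited_shift = max(-max_grey_shift, min(max_grey_shift, shift))
    return input_dn + limited_shift


# The example from the text: DN 120 with a target of 160.
print(constrain_grey_shift(120, 160))                      # default limit of 32
print(constrain_grey_shift(120, 160, max_grey_shift=50))   # relaxed limit of 50
```

With the default limit the pixel stops at 152, as described above. With the limit raised to 50, the full shift of 40 fits inside the constraint and the pixel reaches its target of 160.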

Here is a screenshot previewing the original and dodged image (left) with my final dodging parameters (right).

In addition to changing the constraints outlined above, I also decreased the brightness a bit and bumped up the contrast (evenly across bands - I didn't adjust any single band more than another). This helped to reduce the washed out effect and produce an even scene.

Here's a close-up of the urban area with a part of it under construction. You can see how the dodged image on the right has much better results. Grassy areas are green, roofs look correct, and the construction site doesn't look so washed out.

I used ImageEqualizer for dodging this example image, but the same dodging algorithm is also embedded as a color correction option in ERDAS MosaicPro. Applying dodging in MosaicPro will apply the results to the output mosaic and will not impact the input images. This can be handy for "two-stage" dodging. For difficult radiometry, it is sometimes necessary to dodge the imagery twice: once right after scanning and once during (or after) the photogrammetric processing (e.g. during or after mosaicking).

Thursday, May 8, 2008

Los Angeles Web Mapping Service

In Monday's post I talked about processing image and LIDAR data for rapid response mapping. Around the same time as the exercise in China Lake, Leica Geosystems also flew downtown Los Angeles. A colleague and I had the opportunity to process the image data from raw imagery through to a final orthophoto (orthomosaic) product, and then we had it served up as a WMS using IWS technology.

Here is the WMS link:

http://demo.ermapper.com/ecwp/ecw_wms.dll?la?

If you have a decent web connection it is fairly straightforward to fire it up in ERDAS IMAGINE using the same workflow I walked through here. If you're prompted for a resolution or GSD, you can use 0.127 meters (this may happen if you have 9.2 SP1 loaded). Here's the entire project area loaded up in a viewer:

Since the imagery was flown relatively recently (late summer '07, I believe it was around August), it is quite a bit more recent than the imagery in Google Earth or Virtual Earth. Check out the area just north of the Staples Center:

Google Earth (credits to Sanborn):

Virtual Earth (credits to USGS):

RCD105 WMS:

It is interesting to see the sequenced development over time. Which brings me to an enhancement request I've seen several times out there: Google Earth and Virtual Earth should really think about including the "date of image capture" as a displayable layer (or exposing it by some other means). Right now it seems like guesswork to find out when an area was actually collected. This is "kind of" available in version 4.3 of Google Earth - the dates of some images are displayed in the status bar, but not all (I think only satellite image collection dates are displayed). Since Sanborn is largely an aerial provider, there are no dates on their downtown LA imagery.

One of the interesting things I've noticed about the LA datasets in both Google Earth and Virtual Earth is that both seem to take the "high brightness, high contrast" approach to radiometric processing. This is good for making a pretty, visually appealing image, but tends to blow out some image detail in "light" areas (e.g. white and light colored areas). Here's an example from Google Earth (also downtown LA):

You can see that the RCD105 imagery looks quite a bit "darker", as I didn't bump up the brightness/contrast too much. It was also hazy on the day of data collection, which can also cause issues. However, keeping the brightness and contrast down allows for image details to be picked up - although I'm thinking of making a "pretty picture" version as well. Shows that radiometric adjustment is very much an art as well as a science!!

A few notes on the processing: the final orthomosaic for the project area in the RCD105 WMS was about 4.5 gigs. We used ECW compression (10:1) in IMAGINE to compress it down to a 220 megabyte ECW file, which was the final image used for the WMS layer. While ECW is a lossy compression format, there is little visual difference between the IMG and ECW files. If you really zoom in close, it is possible to see some compression artifacts - but I suppose this is the trade-off between speed and quality. For general purposes it seems like a decent method for prepping imagery.
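As a quick sanity check on those numbers, here is a small Python sketch that works out the ratio actually achieved from the file sizes quoted above. It assumes "gigs" means 1 GB = 1024 MB, and the function name is hypothetical. The result comes out at roughly 20:1 rather than 10:1, which suggests the 10:1 setting acts as a target rather than an exact outcome (that is my inference from the arithmetic, not something stated by the software).

```python
def compression_ratio(original_mb, compressed_mb):
    """Return how many times smaller the compressed file is than the original."""
    if compressed_mb <= 0:
        raise ValueError("compressed size must be positive")
    return original_mb / compressed_mb


original_mb = 4.5 * 1024   # "about 4.5 gigs", assuming 1 GB = 1024 MB
compressed_mb = 220.0      # size of the final ECW file

achieved = compression_ratio(original_mb, compressed_mb)
print(f"Achieved compression: about {achieved:.0f}:1")
```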

Wednesday, May 7, 2008

New ERDAS YouTube Channel

We have just set up an ERDAS YouTube channel at: http://www.youtube.com/user/ERDASINC

This is a good way for us to walk through new features and workflows. Instead of learning about a feature as a bullet point on an email or brochure, you can see it live in action. Right now there are a couple of TITAN videos there, and we will be adding more. Feel free to check them out, and I'll post when I put any photogrammetry/mapping videos up!

Monday, May 5, 2008

LIDAR and Imagery Collection for Rapid Response Mapping

At our ASPRS UGM last Tuesday I presented a case study on rapid response mapping. The case study was an interesting application, so I thought I would share it here as well. The focus was on a joint ERDAS (software) and Leica Geosystems (hardware) exercise conducted last summer called "Empire Challenge 2007". This was a joint military exercise for testing intelligence, surveillance and reconnaissance (ISR) concepts. The exercise was initially developed after technical issues were identified in sharing ISR information between allies in hotspots such as Afghanistan. I wasn't personally at the event, held near China Lake (California), but did get a chance to work with some of the data that was collected.

For the ERDAS/Leica team, the exercise involved flying a Cessna 210 mounted with both LIDAR and optical sensors over a project area and then creating final data products immediately after downloading the data. The team spent approximately three weeks on-site, and during this time they worked in three project areas and were able to fly, collect, and process a few thousand images and a massive quantity of LIDAR data.

The hardware consisted of an ALS50 (LIDAR), the soon-to-be-released RCD105 digital sensor, as well as Airborne GPS/IMU, a GPS Base Station, and some data processing workstations. While not officially released by Leica, the RCD105 was first "announced" at last year's Photogrammetry Week in Stuttgart, Germany. More specifically it was discussed in this paper by Doug Flint and Juergen Dold. It is a 39 megapixel medium-format digital camera - which makes for a great solution when coupled with the ALS50 airborne LIDAR system. Here is an image of the RCD105:

The software mix covered several areas. These included:

- Flight planning and collection software (FPES and FCMS)

- LIDAR processing (TerraScan)

- GPS and IMU processing (IPAS Pro, IPAS CO, and GrafNav)

- Image processing (ERDAS IMAGINE)

- Photogrammetric Processing (LPS, ERDAS MosaicPro, ERDAS ImageEqualizer)

- Quality Control (ERDAS IMAGINE)

Three types of missions were flown:

- A basemap collection flight. At 3048 meters, this was the highest altitude flight. The imagery GSD (Ground Sample Distance) was 0.3 meters. Data products included an orthomosaic, georeferenced NITF (National Imagery Transmission Format) stereo pairs, NITF orthos, a LIDAR point cloud, and a LIDAR DEM.

- A tactical mapping mission. This was a lower altitude flight (914 meters) collecting imagery at a GSD of 0.06 meters. This was "tactical" as it covered specific project areas - as opposed to the broad swath of data collected from the higher altitude basemapping flight. Data products included an orthomosaic, georeferenced NITF stereo pairs, NITF orthos, and a LIDAR point cloud and DEM.

- An IED corridor mission: basically covering a linear feature (a road). This was the lowest altitude and highest resolution flight (at 305 meters and a GSD of 0.04 meters), which produced NITF stereo pairs, NITF orthos, as well as a LIDAR point cloud and DEM.
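For readers newer to flight planning, the link between flying height and GSD for a frame camera can be sketched with the usual nadir approximation: GSD = altitude x pixel pitch / focal length. The Python below is only an illustration. The pixel pitch and focal length are hypothetical placeholder values, not RCD105 specifications, and the GSDs of the two lower flights are assumed to be in meters.

```python
def ground_sample_distance(altitude_m, pixel_pitch_m, focal_length_m):
    """Approximate nadir GSD (meters) for a frame camera over flat terrain."""
    return altitude_m * pixel_pitch_m / focal_length_m


# Altitude and GSD pairs as quoted in the list above (GSD units assumed meters).
reported = {
    "basemap": (3048.0, 0.30),
    "tactical": (914.0, 0.06),
    "IED corridor": (305.0, 0.04),
}

for name, (altitude_m, gsd_m) in reported.items():
    altitude_ft = altitude_m / 0.3048
    print(f"{name}: {altitude_ft:,.0f} ft, altitude/GSD ratio {altitude_m / gsd_m:,.0f}")

# Hypothetical camera: 6.8 micron pixels behind a 60 mm lens (placeholder values).
example_gsd = ground_sample_distance(3048.0, 6.8e-6, 0.060)
print(f"Hypothetical camera at 3048 m: GSD of {example_gsd:.3f} m")
```

Note that the three quoted altitude and GSD pairs do not share a single altitude-to-GSD ratio, so one fixed lens and pixel pitch would not reproduce all three figures exactly. The post does not say why, so treat the formula as a rule of thumb rather than a reconstruction of the actual flight plans.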

And here's one of the images (in this case shown during point measurement - a part of the triangulation process - in LPS):

As you can see, the radiometry is tough! This is why ImageEqualizer had to be used to perform radiometric corrections.

Most of the workflow was relatively standard (mission planning, data collection, and the photogrammetric processing), but some of the final product preparation steps were pretty interesting. Since one of the main goals of the entire exercise was to produce intelligence products that could be shared with other groups, special consideration had to be given to exactly how the data would be formatted for delivery to the other Empire Challenge groups ingesting the data. Since the groups accepting the data could have been using any number of software packages, the ERDAS/Leica team had to steer clear of proprietary formats. However, one thing that many image processing and photogrammetry products have in common is the ability to ingest images with an associated RPC (Rational Polynomial Coefficient) model. Here is a good description of RPCs in GeoTIFF. Since this was a military exercise, the images (processed as TIFFs) had RPCs generated in IMAGINE and then were exported to NITF. This made it possible to pass along the final data products to several groups without any data format/interoperability issues. One thing to note is that "RPC Generation" was introduced in the IMAGINE 9.1 release in early 2007.
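For anyone who hasn't worked with RPCs, the idea is that image coordinates are expressed as ratios of cubic polynomials in normalized latitude, longitude, and height. Here is a minimal Python sketch of the ground-to-image direction. The term ordering is assumed to follow the common RPC00B convention, the dictionary keys are hypothetical names chosen for this example, and the toy coefficients are made up purely so the arithmetic is easy to follow. It is not the IMAGINE implementation.

```python
def rpc_terms(P, L, H):
    """The 20 cubic terms in normalized lat (P), lon (L), height (H).

    Ordering assumed to follow the RPC00B convention.
    """
    return [
        1.0, L, P, H, L * P, L * H, P * H, L * L, P * P, H * H,
        P * L * H, L ** 3, L * P * P, L * H * H, L * L * P,
        P ** 3, P * H * H, L * L * H, P * P * H, H ** 3,
    ]


def rational(numerator, denominator, P, L, H):
    """Evaluate the ratio of two 20-coefficient cubic polynomials."""
    terms = rpc_terms(P, L, H)
    num = sum(c * t for c, t in zip(numerator, terms))
    den = sum(c * t for c, t in zip(denominator, terms))
    return num / den


def ground_to_image(lat, lon, height, rpc):
    """Map a ground coordinate to (row, column) using an RPC model."""
    # Normalize the ground coordinates with the model's offsets and scales.
    P = (lat - rpc["lat_off"]) / rpc["lat_scale"]
    L = (lon - rpc["lon_off"]) / rpc["lon_scale"]
    H = (height - rpc["h_off"]) / rpc["h_scale"]
    row_n = rational(rpc["line_num"], rpc["line_den"], P, L, H)
    col_n = rational(rpc["samp_num"], rpc["samp_den"], P, L, H)
    # De-normalize back to pixel coordinates.
    return (row_n * rpc["line_scale"] + rpc["line_off"],
            col_n * rpc["samp_scale"] + rpc["samp_off"])


# Toy model (made-up values): row depends only on P, column only on L.
identity_den = [1.0] + [0.0] * 19
toy_rpc = {
    "lat_off": 35.0, "lat_scale": 0.1,
    "lon_off": -117.0, "lon_scale": 0.1,
    "h_off": 700.0, "h_scale": 500.0,
    "line_off": 2000.0, "line_scale": 2000.0,
    "samp_off": 3000.0, "samp_scale": 3000.0,
    "line_num": [0.0, 0.0, 1.0] + [0.0] * 17, "line_den": identity_den,
    "samp_num": [0.0, 1.0] + [0.0] * 18, "samp_den": identity_den,
}

row, col = ground_to_image(35.0, -117.0, 700.0, toy_rpc)
print(f"row={row:.1f}, col={col:.1f}")
```

Because the model is just offsets, scales, and 80 coefficients, it travels easily alongside an image, which is what makes it a convenient interchange mechanism between software packages.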

By the end of the project the total processing times for the various missions could be measured in hours. The basemap mission took the longest (about three days for the entire end-to-end process), but it had approximately 900 images along with the LIDAR data.

Here's a screenshot of an orthomosaic over terrain. The radiometry hasn't been fully processed in this image, but it gives you an idea of what the project area was like:

Thursday, May 1, 2008

ASPRS Update and News

It has been a good week so far at this year's ASPRS conference in Portland. This was the first major show we've been to since the ERDAS name change, so we had a completely updated exhibition booth. Here's a snap of it:

The UGM on Tuesday was a good session as well. I spoke about a rapid response mapping project (basically a sensor fusion to data product generation exercise, which I will write about later) and also gave a short demonstration of LPS. There were some good discussions, particularly around the presentation/demo of the IMAGINE Objective feature extraction and classification tool.

In other news, Microsoft Photogrammetry (Vexcel) announced a new software system (called UltraMap) for processing UltraCamX imagery during today's "Photogrammetry Solutions" session. Michael Gruber gave an overview of the new system, which mainly covers the "upstream" part of the photogrammetric workflow (download, pyramid generation, QC, point measurement, etc). A couple of interesting points about it are that they support distributed processing for some functions (using internal Microsoft technology) and they are using Microsoft's Seadragon technology for image viewing. This is the same technology behind Photosynth, which is impressive.