Monday, April 28, 2008

Heading to Portland, Whereyougonnabe?

Later today I'll be traveling to Portland for the annual ASPRS Conference. The theme of the conference, "Bridging the Horizons - New Frontiers in Geospatial Collaboration", got me thinking about the new (beta) Facebook application called Whereyougonnabe? from Peter Batty and co. at Spatial Networking. Whereyougonnabe is a service that lets you enter the "where and when" of upcoming trips and activities. The application then tells you which of your friends will be close to you, which is handy for all kinds of reasons. This also explains the company name: "Spatial Networking" rather than social networking. I had a chance to play around with it recently and was particularly impressed with the Google Earth support. You can click a link that generates a KML file, which opens in Google Earth (and other KML-supporting apps) and shows your trip paths and those of your friends. See the screenshot below for my trip to Portland:

Not only is your path displayed, but there is also a timeline control. It also generates an icon from your profile picture, which is a nice touch.

Back to ASPRS: I will write some updates throughout the week, but if you happen to be going, consider stopping by our UGM tomorrow morning, or come by the booth anytime! We'll be giving out some new ERDAS t-shirts and will also have a draw for an iPhone between 3 and 4pm on Wednesday.
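For anyone curious what such a KML file contains, here is a minimal Python sketch that builds a comparable one with the standard library. It is not Whereyougonnabe's code; the function name `build_trip_kml`, the example coordinates, and the icon URL are hypothetical. It shows the pieces that matter here: a `TimeSpan` (which is what drives Google Earth's time slider), an icon style pointing at a picture, and the path itself as a `LineString`.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

KML_NS = "http://www.opengis.net/kml/2.2"


def q(tag):
    """Qualify a tag name with the KML 2.2 namespace."""
    return f"{{{KML_NS}}}{tag}"


def build_trip_kml(name, begin, end, path, icon_href):
    """Build a one-trip KML document (hypothetical helper).

    path is a list of (lon, lat) tuples; KML writes coordinates as lon,lat.
    """
    ET.register_namespace("", KML_NS)
    kml = ET.Element(q("kml"))
    doc = ET.SubElement(kml, q("Document"))

    # Shared icon style; icon_href would point at a profile picture.
    style = ET.SubElement(doc, q("Style"), id="traveller")
    icon = ET.SubElement(ET.SubElement(style, q("IconStyle")), q("Icon"))
    ET.SubElement(icon, q("href")).text = icon_href

    placemark = ET.SubElement(doc, q("Placemark"))
    ET.SubElement(placemark, q("name")).text = name
    # A TimeSpan is what makes Google Earth show its time slider.
    span = ET.SubElement(placemark, q("TimeSpan"))
    ET.SubElement(span, q("begin")).text = begin.isoformat()
    ET.SubElement(span, q("end")).text = end.isoformat()
    ET.SubElement(placemark, q("styleUrl")).text = "#traveller"

    # Destination point (carries the icon) plus the full travel path.
    geom = ET.SubElement(placemark, q("MultiGeometry"))
    dest_lon, dest_lat = path[-1]
    point = ET.SubElement(geom, q("Point"))
    ET.SubElement(point, q("coordinates")).text = f"{dest_lon},{dest_lat}"
    line = ET.SubElement(geom, q("LineString"))
    ET.SubElement(line, q("coordinates")).text = " ".join(
        f"{lon},{lat}" for lon, lat in path
    )
    return ET.tostring(kml, encoding="unicode")


if __name__ == "__main__":
    # Arbitrary example coordinates and URL, for illustration only.
    print(build_trip_kml(
        "Trip to Portland",
        datetime(2008, 4, 28), datetime(2008, 5, 2),
        [(-84.39, 33.75), (-122.68, 45.52)],
        "http://example.com/profile.jpg",
    ))
```

Saving the output with a .kml extension and opening it in Google Earth should show the path, the icon at the destination, and the time slider.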

Saturday, April 26, 2008

Dodging: Intro and Statistics

One of the problems with creating seamless image mosaics or tiled orthophotos is "getting the radiometry right". What does that mean? Well, there are a lot of things that can turn a project sour when trying to make a good-looking final product. Some common radiometric issues are:

- Hotspots and vignetting: these are common in scanned aerial photography. Hotspots are bright spots caused by the sun's reflection, while vignetting is a darkening towards the corners of the image. See here for a good vignetting description.

- Flightlines of imagery flown on different days or at different times of day. Some mosaicking jobs include adjoining flightlines captured at different times of day or on different days (with different weather conditions). This can be a difficult problem to deal with because the imagery of the adjoining flightlines may differ completely in terms of sun angle, brightness level, cloud coverage, and so forth.

- Brightness problems. Another issue is when one color band's brightness level is too high or too low, usually due to on-board sensor settings. For example, the imagery looks "blue-ish" because the blue band brightness level is skewed. Panchromatic imagery may also be too dark or too light and need adjustment. Brightness can also vary within a single image.

For aerial photography, one tool that can be used to alleviate the above problems is a process known as dodging. Other problems, such as the atmospheric issues associated with satellite imagery, may require other tools.

So how can dodging help?

Dodging basically attempts to create a uniform spectral intensity within and across images. In the traditional darkroom sense of the word, dodging means reducing the exposure of a portion of the print in order to make that portion appear lighter. In photogrammetric processing it is a completely digital process. There are numerous programs available to perform dodging, and they often share techniques.
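To make the idea of a "uniform intensity" concrete, here is a rough Python sketch of the simplest form of digital dodging: shifting and stretching a block of pixels so its mean and standard deviation match target values. This is a generic mean/standard-deviation match, not the LH Systems Fastdodge algorithm, and `dodge_tile`, `target_mean`, and `target_std` are hypothetical names.

```python
def dodge_tile(pixels, target_mean, target_std, max_dn=255):
    """Shift and stretch one tile's DNs so its mean/std match a target.

    A generic mean/standard-deviation match, NOT the Fastdodge algorithm.
    pixels is a flat list of DN values; output is clipped to 0..max_dn.
    """
    n = len(pixels)
    mean = sum(pixels) / n
    std = (sum((p - mean) ** 2 for p in pixels) / n) ** 0.5
    # A flat tile has no contrast to stretch, so only its level is shifted.
    gain = target_std / std if std > 0 else 1.0
    out = []
    for p in pixels:
        value = (p - mean) * gain + target_mean
        out.append(min(max(round(value), 0), max_dn))
    return out


# A dark tile and a bright tile pulled toward the same target (example values).
dark = dodge_tile([10, 20, 30], target_mean=100, target_std=10)
bright = dodge_tile([190, 200, 210], target_mean=100, target_std=10)
```

Both example tiles end up with the same values, which is the point of dodging. Real dodging tools typically smooth or interpolate the per-tile corrections so tile boundaries don't show up in the output; that step is left out here to keep the sketch short.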

At ERDAS we offer dodging capabilities in a few different applications, namely Leica MosaicPro and ImageEqualizer. Both of these applications use a dodging algorithm originally developed by LH Systems for the DSW scanner line (implemented in a program called Fastdodge).

In these applications, the processes for statistics generation are similar:

A set of statistics is calculated (e.g. mean, standard deviation) based on certain user-defined parameters: grid size, skip percentage, and the minification layer (aka pyramid layer) for the process to run on. Of these, the grid size and minification layer can be quite critical for success.

- Grid size defines the number of grid tiles in x and y for the statistics to run on. For example, a grid size of 10 results in 100 grid tiles (10 in x, 10 in y). Depending on the scene, this can have a big effect. Frames with relatively contiguous radiometry can use small grid sizes. "Complex" scenes, such as a frame containing water, urban, and rural/vegetated areas, usually require a higher grid size/density to prevent one radiometrically contiguous area from affecting another. For example, consider a patch of dark green vegetation surrounded by lighter-colored grain crops. If each grid tile is significantly larger than the dark green patch, the output (depending on the constraints applied) will wash out the dark green because of the statistical influence of the DN values of the lighter-colored grain. However, sometimes the problem outlined in this example is impossible to avoid! This is why dodging is both a science and an art....

- "Skip percentage" is generally referred to as an "edge trimmer" (i.e. the percentage of the image edge that is ignored during stats generation). It is important not to confuse this with "skip factor", which generally refers to sampling only every nth pixel when calculating stats. Using a high skip factor can have the same effect as using a lower-resolution pyramid layer.
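Here is a rough Python sketch of how grid statistics with an edge trimmer and a skip factor could be computed. It only illustrates the parameters described above; `grid_statistics` and its arguments are hypothetical names, not the MosaicPro or ImageEqualizer implementation, and the image is assumed to be a plain 2D list of DN values for one band.

```python
def grid_statistics(image, grid_size, skip_pct=0.0, skip_factor=1):
    """Per-tile mean and standard deviation for a 2D list of DN values.

    grid_size: tiles in x and in y (grid_size 10 -> 100 tiles).
    skip_pct: fraction of rows/cols trimmed off each image edge.
    skip_factor: sample every skip_factor-th pixel in x and y.
    Returns a grid_size x grid_size list of (mean, std) tuples.
    """
    rows, cols = len(image), len(image[0])
    # Edge trimmer: drop skip_pct of the extent from every side.
    r0, c0 = int(rows * skip_pct), int(cols * skip_pct)
    r1, c1 = rows - r0, cols - c0
    tile_h = (r1 - r0) / grid_size
    tile_w = (c1 - c0) / grid_size
    stats = []
    for ty in range(grid_size):
        ra, rb = r0 + int(ty * tile_h), r0 + int((ty + 1) * tile_h)
        row_stats = []
        for tx in range(grid_size):
            ca, cb = c0 + int(tx * tile_w), c0 + int((tx + 1) * tile_w)
            # Skip factor: only every skip_factor-th row and column is read.
            vals = [image[r][c]
                    for r in range(ra, rb, skip_factor)
                    for c in range(ca, cb, skip_factor)]
            if not vals:  # tile smaller than one sample
                row_stats.append((None, None))
                continue
            mean = sum(vals) / len(vals)
            std = (sum((v - mean) ** 2 for v in vals) / len(vals)) ** 0.5
            row_stats.append((mean, std))
        stats.append(row_stats)
    return stats
```

With a coarse grid, a tile straddling two different land covers gets a blended mean, which is exactly the wash-out effect described in the grid size example.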

Wednesday, April 23, 2008

Sensor Spotlight: the RC30

Since I covered a satellite sensor in the last sensor spotlight, I thought I would go for an airborne sensor this time. The focus of today's post is the RC30, which has been the workhorse of the airborne mapping community for many years. Since Leica Geosystems introduced it in 1992, over 400 cameras have been deployed all over the world.

About the sensor: the RC30 is a frame camera system that can capture imagery on color, panchromatic, and false color film. There are a couple of different lens options (6 and 12 inch focal lengths), which allow for large-scale mapping applications. Complete specifications are available here.
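The focal length ties directly to photo scale. As a quick worked example, assuming a vertical photo over flat terrain, a 23 x 23 cm film frame, and a hypothetical flying height of 5,000 ft above ground, the photo scale is 1:S with S = H / f. The function names below are illustrative only.

```python
INCH_MM = 25.4
FORMAT_MM = 230.0  # assumed 23 x 23 cm film frame


def scale_number(focal_mm, flying_height_m):
    """S in the photo scale 1:S for a vertical photo over flat terrain (S = H / f)."""
    return flying_height_m * 1000.0 / focal_mm


def footprint_m(focal_mm, flying_height_m, format_mm=FORMAT_MM):
    """Ground side length covered by one square frame, in meters."""
    return format_mm / 1000.0 * scale_number(focal_mm, flying_height_m)


for inches in (6, 12):
    f = inches * INCH_MM
    # Hypothetical flying height: 1524 m (5,000 ft) above ground.
    s = scale_number(f, 1524.0)
    print(f"{inches} inch lens: about 1:{s:,.0f}, "
          f"frame covers ~{footprint_m(f, 1524.0):,.0f} m")
```

At 5,000 ft that works out to roughly 1:10,000 with the 6 inch lens and 1:5,000 with the 12 inch lens, so at the same height the longer lens covers half the ground width per frame at twice the scale.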

For an example of what RC30 imagery looks like, check out the orthophotos available online at MassGIS. These were flown by Keystone Aerial Surveys, who also happen to have a great photo of the camera on their site here. Other components in the system may include a GPS/IMU system, a PAV30 gyro-stabilized mount, a GPS reference station, and more.

In LPS, the workflow for the RC30 is the classical frame photogrammetry workflow - it is basically the workflow outlined here.

Lastly, just to demonstrate the longevity of this system, check out this advertisement from 1988 for an RC20 (Wild Heerbrugg was an earlier incarnation of Leica Geosystems). The RC20 was the predecessor to the RC30, and is essentially the same except for the addition of gyro-stabilized suspension on the RC30. Aside from that, I just think it is a very cool ad!!!

Triangulated Irregular Network (TIN) Formats and Terrain Processing

One issue in the mapping community is that there is no standard format for Triangulated Irregular Network (TIN) terrain files. Most geospatial applications use proprietary formats, which present serious interchange problems when moving the data around (e.g. from clients to customers, or even within an organization). Unfortunately we are guilty of this as well in LPS, with our LTF TIN format. Many people get around the limitations of proprietary formats by using ASCII as a common interchange format, where each row lists the X, Y, and Z of one TIN mass point (separated by commas, tabs, or whitespace). Some systems also support point codes for breaklines, which are a critical part of the TIN structure. The main problem with ASCII is that once you get past several million points, the file can be cumbersome to deal with, which means it may be necessary to divide the data into tiles. Alternatively you can convert the TIN to a raster format, but this can be undesirable if you have dense or unevenly distributed mass points, or if you have breaklines in the TIN (since rasters don't support breaklines).
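As a rough illustration of the ASCII approach, here is a Python sketch that reads XYZ rows regardless of delimiter and buckets the points into square tiles. The optional fourth column is treated as a point code, but the meaning of point codes differs between systems, so that part is an assumption, as are the function names, the "#" comment convention, and the tile size.

```python
import re
from collections import defaultdict

# One or more commas, tabs, or spaces count as a single delimiter.
SPLIT = re.compile(r"[,\s]+")


def read_xyz(lines):
    """Yield (x, y, z, code) from XYZ text lines.

    A fourth column, if present, is returned as a point code (e.g. a
    breakline flag); its meaning is system-specific and assumed here.
    """
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):  # skip blanks and comments
            continue
        fields = SPLIT.split(line)
        x, y, z = (float(f) for f in fields[:3])
        code = fields[3] if len(fields) > 3 else None
        yield x, y, z, code


def tile_points(points, tile_size):
    """Bucket points into square tiles keyed by (column, row) index."""
    tiles = defaultdict(list)
    for x, y, z, code in points:
        key = (int(x // tile_size), int(y // tile_size))
        tiles[key].append((x, y, z, code))
    return tiles
```

`read_xyz` streams line by line, so it can be fed an open file directly; `tile_points` keeps everything in memory, so for a multi-million-point file you would write each bucket to its own tile file instead.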

A case in point is the LIDAR data from the Washington State Geospatial Data Archive. While there are standards for LIDAR data, most commercial applications do not (yet) natively support them. Hence there is a need to make the data available in alternate formats. The data is available in ASCII and TIN format for each quarter quad, but the TIN data is in .e00 format, an old interchange format developed by ESRI. Hence, the site recommends importing the .e00 files into ArcMap in order to use them. The only issue with that is that you need ArcMap... This is likely a reason for including XYZ ASCII as an option as well. Another option is a 3 meter DEM for the entire coverage area, which can be downloaded from here. It is in Arc/Info binary grid format though...

So how do we handle all of this in LPS?

There are a few different options here, and the LPS and IMAGINE groups have been working on a solution. Prior to the LPS/IMAGINE 9.2 release, users had to use either the 3D Surface Tool in IMAGINE or the terrain Split and Merge tool in LPS Core. In 9.2, and moving forward in future releases, we are consolidating our efforts by extending the LPS Split and Merge tool, renaming it the "Terrain Prep Tool", and making it available in IMAGINE. It is available from Data Prep > Create Surface in IMAGINE, or from Tools > Terrain Prep Tool in the LPS Project Manager.

The Terrain Prep Tool adds new split/merge functionality inherited from LPS and resolves some longstanding limitations associated with the 3D Surface Tool (e.g. it dramatically increases the number of points that can be handled). For 9.2 we also added a few formats, such as LAS and two flavors of ASCII (with and without point codes for breaklines). The 3D Surface Tool will likely remain available for a few more versions, until we have fully replaced its functionality in the Terrain Prep Tool.

Friday, April 18, 2008

3D City Building Case Study

While high-fidelity 3D city models have been around for 10+ years, they have been getting a lot of attention in recent years with the advent of Google Earth and Virtual Earth. I've touched a little bit on 3D building extraction and texturing in a previous post, but recently came across an excellent case study from Magnasoft entitled "Build Virtual Cities to Plan Real Cities".

The case study provides an overview of start-to-finish textured city construction. The main input data consists of stereo pairs, and the software involved includes LPS, MicroStation, and ArcGIS. The final products go well beyond just buildings: they also include roads, tree canopies, water bodies, electric poles, walls, and fences. You can see from the stereo feature compilation screenshot (in part 5) that complex building structures can be modeled in high detail.

Just to explain the process in a bit more detail: MicroStation is important because LPS' main 3D feature extraction application, PRO600, is an MDL application that hooks into the LPS stereo viewer and allows users to extract 3D objects in stereo within the MicroStation environment. After the building intelligence is added in ArcGIS, the models are brought into Stereo Analyst for IMAGINE (another 3D extraction tool, which is an add-on to ERDAS IMAGINE), where a feature called the Texel Mapper can be used to automatically texture the buildings from the "block" of aerial photographs. I'll expand more on this process in a future post...

Wednesday, April 16, 2008

Service Pack 1 for LPS and ERDAS IMAGINE 9.2 Now Available!

The new service pack for LPS and IMAGINE is now available for download on the ERDAS Support Site. Instead of releasing separate service packs for LPS and ERDAS IMAGINE, we decided to release a single consolidated SP that covers both products, which should make life easier for users.

While the SP mainly consists of fixes, we have some new features in LPS that I wanted to highlight as well. One of the main new features is the Post Editor in the Terrain Editor. This tool (see the screenshot below) is for gridded terrain data, and allows you to quickly jump from post to post, adjusting the Z value as you go. The navigation is also device-mappable, so you can use keyboard shortcuts or buttons on an input device (e.g. a Topomouse).

Here is a complete list of the fixes and enhancements:

LPS

· Automatic Terrain Extraction (ATE):

o Added note to online help for Adaptive ATE explaining that image datasets vary and you must check your results and make selections (both the method and the ATE parameters) based on your particular datasets. [LPS-1626]

o Adaptive ATE correctly generates DTMs using ADS40 images and does not show the error message "failed to transform points". [LPS-1531]

o Resolved a "Bad allocation" memory error when using ADS40 images to generate a DTM in .img format. [LPS-1540]

o Added note to OLH advising user about using pyramid levels and effective building filtering. [LPS-1582]

o Adaptive ATE now measures points over the complete stereo model area, not just 70 to 80% (center part). [LPS-1482]

o Fixed DTM extraction "out of space" error so that the user can cancel the process. [LPS-1548]

o Fixed an ATE issue associated with the "individual files" output setting. [LPS-1313]

o Added support for all sensors in Adaptive ATE (not just frame cameras and ADS sensors). [LPS-1562]

· Block Triangulation:

o Synchronized units of measure for GCPs and residuals in Refinement Report. [LPS-1267]

· Documentation:

o Added tip to Mosaic OLH explaining how to correctly lay out ADS L2 images. [LPS-231]

o Enhanced content for "Set Constant Z" in Terrain Editor OLH. [LPS-1341]

o Updated OLH hyperlink in Camera File Info. [LPS-1508]

o Improved the content of the View Manager OLH. [LPS-1465]

· Terrain Prep:

o Eliminated write permission errors when opening LTF files. [LPS-1187]

· Frame Editor:

o Eliminated LPS blockfile issues when editing the model parameters in Frame Editor for the WorldView Orbital Pushbroom and SPOT5 models. [LPS-1545]

o "Cancel" option cancels current image in the Frame Editor and not the selected image in the LPS project manager cellarray. [LPS-1577]

· Interior Orientation:

o Changing the camera selection for a block file (after performing IO with the wrong camera) no longer prevents subsequent IO with the same block file and the new camera selection. [LPS-1283]

· LPS General:

o Synchronized units of measure for the Average Flying Height (Frame Camera) and Average Elevation (Orbital Pushbroom) in Block Property Setup with the units in the block file. [LPS-1575]

o New Feature: Stereo Analyst - "Extend Features to Ground", which uses a 3D Polygon Shapefile and extends the segments of each polygon (as faces) to the ground to form solid features (e.g. Buildings). [LPS-1567]

o The Average Elevation, Minimum Elevation and Maximum Elevation units in RPC Model projects are now displayed in the project vertical units in the Frame Editor. [LPS-1597]

o Eliminated write permission errors when opening LTF files. (See LPS-1187) [LPS-1492]

o Added QuickBird/WorldView NCDRD format support to online help. [LPS-1610]

o Added support for the Latvian Gravimetric Geoid (LGG98) and Latvian Coordinate System (LKS-92) [LPS-1557]

o Added support for the LHN95 geoid (

· MosaicPro:

o Fixed MosaicPro occasional issues when running Image Dodging with default settings on tiled TIFF images. [LPS-1554]

o Improved performance for seam polygon generation with "most nadir", "geometry", and "weighted" options. [LPS-1552]

o Fixed occasional display issue when one or more images have been mosaicked but do not display. [LPS-1578]

o Fixed MosaicPro occasional issues when working with pyramid layers (Image Dodging and Mosaicking). [LPS-1553]

o Resolved an issue associated with clipping Shapefiles that do not contain projection information. [LPS-585]

· Orthoresampling Process:

o Resolved an issue associated with orthophoto generation when using ATE-derived LTF files in LSR coordinate systems. [LPS-1579]

· Import/Export:

o Enhanced Importer for ISAT projects with multiple flight lines. [LPS-1550]

· Sensor Models:

o In special cases where the RPC does not have a change in the Z value, Triangulation failed with an error of "error with computing default ground delta". These special cases now triangulate correctly. [LPS-1510]

o Added support for NITF NCDRD format in orbital pushbroom QuickBird/WorldView model. [LPS-1551]

· Terrain Editor:

o New Feature: Post Editor (Terrain Editor) - allows a user to quickly move through points and adjust the Z value for selected points in grid terrain files. [LPS-484]

o Enhanced jpeg image display quality in Terrain Editor. [LPS-1547]

Leica Stereo Analyst

· Stereo Analyst:

o Extend Features to Ground will convert flat 3D polygons into solid shapes by extruding each polygon to the ground. Ground elevation may be defined by Shape Attributes, Terrain Dataset or a constant height. Output may be directed to a Multipatch Shape File or 3DS file. [SAI-136]
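The "Extend Features to Ground" idea is straightforward geometry: every edge of a roof polygon becomes a vertical wall face reaching down to the ground height. Here is a minimal Python sketch, assuming a simple (x, y, z) vertex list; `extend_to_ground` is a hypothetical name, it is not the Stereo Analyst implementation, and writing the faces out to a Multipatch Shape File or 3DS file is not covered.

```python
def extend_to_ground(roof, ground_z):
    """Extrude a flat 3D roof polygon down to the ground as wall faces.

    roof: list of (x, y, z) vertices, first vertex NOT repeated at the end.
    ground_z: a constant height, or a function (x, y) -> height standing in
    for a terrain lookup (hypothetical; any DEM sampler would do).
    Returns (walls, floor); each wall is a closed 4-sided ring.
    """
    def ground(x, y):
        return ground_z(x, y) if callable(ground_z) else ground_z

    walls = []
    n = len(roof)
    for i in range(n):
        x1, y1, z1 = roof[i]
        x2, y2, z2 = roof[(i + 1) % n]  # wrap around to close the ring
        g1, g2 = ground(x1, y1), ground(x2, y2)
        walls.append([(x1, y1, z1), (x2, y2, z2),
                      (x2, y2, g2), (x1, y1, g1), (x1, y1, z1)])
    floor = [(x, y, ground(x, y)) for x, y, _ in roof]
    return walls, floor
```

The original roof polygon plus the walls and the floor together form the closed solid.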

ERDAS IMAGINE

· Classification:

o Added subpixel demo example files which were missing in ERDAS IMAGINE 9.2 [IMG-3326]

· Data Exchange:

o Improved MrSID MG2 and MG3 map projection support by allowing the population of internal WKT strings and writing external PRJ files. [IMG-3148]

o Improved MrSID reading support by implementing reading of MrSID World Files and ESRI Projection Files. [IMG-1107]

o Improved portability of 1-bit TIFF images for use with software products outside of ERDAS IMAGINE. [IMG-1709]

o Corrected error in MrSID file creation that caused problems in MicroStation software. [IMG-1342]

o A correction for the stagger phenomenon was implemented for AVNIR-2 level 1A/1B1 data. [IMG-3019]

o Expanded the projections and map units supported for ECW. [IMG-2540]

o Enhanced Oracle GeoRaster compatibility. [IMG-3229]

· Data Prep:

o Added support for Imagizer Data Prep for Windows Vista. Requires the customer to install the Windows Vista Support Update in conjunction with this fix to take advantage of this feature. [IMG-3029]

o Added non-linear surface creation option to Terrain Prep Tool. [IMG-3286]

o Improved performance of 2D shapefiles with attribute defining elevation in Terrain Prep Tool. [IMG-3313]

· Geospatial Light Table (GLT):

o Improved GLT scale display accuracy, when rotating imagery with rectangular pixels. [IMG-2324]

· ERDAS IMAGINE General:

o Updated the setting of the IREP NITF flag when chipping DTED so they would display correctly. [IMG-2311]

o Enhanced toolkit API: CloseMeasure("nosave") will no longer ask to save the measurements, CloseMeasure() will ask to save measurements. [IMG-2885]

o Add support for

o Improved reliability of Toolkit callback functions [IMG-3284]

o Added support for WorldView RPC in Warptool & AutoSync [IMG-3369]

· Interpreter:

o Introduced a new resolution merge capability, the Subtractive Resolution Merge. [IMG-3295]

· NITF Import/Export:

o Fixed a problem creating JPEG 2000 compressed NITF files that were smaller than 1024x1024 pixels. [IMG-3366]

· Vector:

o Added "Use White Textbox" option to Attribute to Annotation tool. [IMG-3218]

· Viewer:

o Removed the Render outside of View preference from the Viewer Preferences. This feature is no longer needed with today's viewing technology, and when enabled it slows down viewing speed. [IMG-3252]

o Corrected the detection of pyramid layers for NITF images with external pyramid layers in support of Dynamic Range Adjustment (DRA). [IMG-2864]

o Fixed IEE functionality. [IMG-3044]

ERDAS IMAGINE Add-ons

· AutoSync:

o Improved the AutoSync Resample Setting Clip to Reference Image Boundary option to more closely clip to the reference image boundary. [IMA-483]

· Radar Mapping Suite:

o Improved support for complex TerraSAR-X (type SSC) data. [IMA-539]

Saturday, April 12, 2008

Sensor Spotlight: ALOS Prism

While there is a lot of interest in "high resolution satellite/airborne data" out there in the blogosphere, I haven't seen much discussion on the merits of individual sensors. Hence, I thought it would be interesting to focus a post now and then on individual satellite and airborne sensors.

One satellite sensor that has been getting a lot of attention lately is ALOS PRISM (Panchromatic Remote-sensing Instrument for Stereo Mapping). ALOS was launched on January 24th, 2006 from the Tanegashima Space Center in Japan. While the focus of this post is on the PRISM sensor, the satellite also hosts two other on-board sensors: the AVNIR-2 and PALSAR sensors.

The unique aspect of the PRISM sensor is its design: it is a pushbroom sensor with three optical systems for capturing forward, nadir, and backward imagery. At nadir the spatial resolution is 2.5 meters. The complete specs are here. For stereo photogrammetry applications, the key fact about PRISM is that it collects stereo imagery, so it is possible to extract 3D terrain and feature information.
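Why stereo matters: height comes from parallax, and the height precision depends on the base-to-height (B/H) ratio of the pair. Here is a back-of-the-envelope Python sketch under a flat-Earth approximation. The view angles you pass in and the B/H value used below are assumptions for illustration, not PRISM specifications, and a rigorous sensor model (such as the one in LPS) accounts for much more.

```python
import math


def base_to_height(fwd_deg, bwd_deg):
    """B/H of a forward/backward pair under a flat-Earth approximation."""
    return math.tan(math.radians(fwd_deg)) + math.tan(math.radians(bwd_deg))


def height_difference(parallax_px, gsd_m, bh):
    """Height difference implied by an x-parallax difference measured in pixels."""
    return parallax_px * gsd_m / bh


# With PRISM's 2.5 m nadir GSD and an assumed B/H of 1.0, each pixel of
# parallax corresponds to about 2.5 m of height.
print(height_difference(1, 2.5, 1.0))
```

A smaller B/H (for example, pairing the nadir view with only one of the tilted views) means each pixel of parallax corresponds to a larger height difference, so height precision drops.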

RESTEC, the Remote Sensing Technology Center of Japan, also has a wealth of information on ALOS and ALOS PRISM. In addition to all the background information, it also has some useful sample data. Here is a sample PRISM image. RESTEC has also developed an ALOS Viewer application that can be used to open images from the various ALOS sensors and perform basic operations like measuring distance and, in the case of stereo PRISM data, manually measuring building height.

Lastly, one thing to note is that the ALOS PRISM rigorous sensor model is supported in LPS 9.2. If this is a data type you need, please look into getting your hands on the new version!

Accessing GeoBase Web Services

GeoBase, the Canadian government portal for free geospatial data, has made several GeoBase layers available as OGC-compliant Web Map Services (WMS). It is also worth noting that the data is free from most restrictions, as stated in the license agreement.

One of the new features in ERDAS IMAGINE 9.2 is the GeoServices Explorer. It is a new way to access web mapping services from desktop versions of IMAGINE and LPS.

Once you log in to GeoBase, accessing their WMS layers is pretty straightforward. They send you the WMS service URL in an email, and that's all there is to it... In IMAGINE it is fairly simple to connect: just open a viewer, hit the "open layer" icon, choose Web Mapping Service as the file type, and then hit the "Connect" button on the bottom right hand side of the interface.

After you hit the "Connect" button the GeoServices Explorer will open (see below). This will list all the WMS layers you can connect to once you have added the GeoBase services. There is a huge wealth of information available in GeoBase. The only thing I couldn't find that I had been hoping for was the SPOT 4/5 data that was made available for download earlier this year. I was able to download the SPOT data but unfortunately just didn't see it as an available layer to connect to...

In the example below I connected to the Canada-wide AVHRR composite - which was a breeze to connect to and display. It was also easy to display other layers such as roads and cities. They also have several layers outside Canada, covering the US, parts of Africa, and a few global datasets. I've included a screen capture of the IMAGINE viewer with the AVHRR data loaded in it.
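Under the hood, a WMS client like the GeoServices Explorer talks to the server with plain HTTP requests defined by the OGC WMS specification. Here is a small Python sketch that builds WMS 1.1.1 GetCapabilities and GetMap URLs. The base URL and layer name are placeholders (GeoBase emails you the real service URL), the function names are hypothetical, and note that WMS 1.3.0 uses `CRS` instead of `SRS` and can change the axis order of `BBOX`.

```python
from urllib.parse import urlencode


def _join(base_url, params):
    """Append query parameters, respecting any '?' already in the URL."""
    sep = "&" if "?" in base_url else "?"
    return base_url + sep + urlencode(params)


def wms_capabilities_url(base_url):
    """GetCapabilities request: lists the layers a WMS offers."""
    return _join(base_url, {"SERVICE": "WMS", "REQUEST": "GetCapabilities"})


def wms_getmap_url(base_url, layer, bbox, width, height,
                   srs="EPSG:4326", fmt="image/png"):
    """WMS 1.1.1 GetMap request; bbox is (minx, miny, maxx, maxy) in srs units."""
    return _join(base_url, {
        "SERVICE": "WMS",
        "VERSION": "1.1.1",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",  # empty = the server's default style
        "SRS": srs,
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": fmt,
    })
```

Pasting a GetMap URL built this way into a browser should return a single image, which is a handy way to check a service before connecting to it from a desktop client.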

Friday, April 11, 2008

Annual ASPRS Conference

As you may know, the annual ASPRS conference is going to be held in Portland this year, from April 28 until May 2nd. For the first time in several years, we'll be holding a user group meeting at the event on Tuesday morning (April 29), starting at 8am in room A106 of the Oregon Convention Center.

For the UGM, there is an agenda that you can check out online. I'll be focusing on a rapid response project we were involved with last year as well as a discussion on our production mapping technology. There's no sign-up or reservations necessary (or cost - you don't even have to be a conference attendee) to attend the UGM, so please feel free to drop by.

Thursday, April 10, 2008

ERDAS: Back to the Future (Revisited)

As you may have read in the media or my previous post, Leica Geosystems Geospatial Imaging has been rebranded as ERDAS. Check out the new marketing campaign:

Kidding! This is an old ERDAS ad from a 1986 edition of PE&RS. Some prime marketing material: "High Resolution 512 x 512 x32 bit true color display", "9-Track Tapes Handling", and more...

Speaking of PE&RS, I'll have some information on the ASPRS conference in Portland tomorrow. Stay tuned...

Tuesday, April 8, 2008

Sensor to GIS: An Example Workflow (Part 2)

In the previous post I outlined the process for ingesting raw imagery into a photogrammetric system and creating GIS data products: orthophotos, terrain, and building feature data. While I skipped what could be considered the very start of the workflow (e.g. flight planning, data download, etc.), the idea was to demonstrate the major steps involved in creating GIS-ready vector and raster data.

In today's post I'll walk through loading and using the data in Quantum GIS. After downloading the software, installation was pretty straightforward. The only real software setup operation I did before getting started with the workflow below was to install the GRASS plugin. This was fairly easy: you just select the Plugin Manager from the Plugin drop-down menu, select the GRASS plugin, hit OK and the plugin gets installed right away.

Since GIS workflows aren't nearly as linear as the photogrammetric processing was, I'm just going to list bullets of operations I went through:

- Load the terrain data. I added my terrain layer (which is a GDAL supported .img IMAGINE raster terrain file) with the Add Raster button. This loads the raster and displays the layer name in the legend on the left hand side of the application. By right-clicking on the layer, I could access the raster layer properties. From there it is possible to change things like the Symbology (check out the stylish "Freak Out" color map below), add scale dependent visibility, view metadata and a histogram.

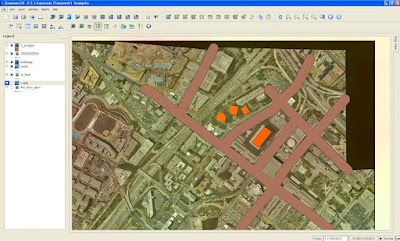

- Load the orthophoto. The methodology for adding the ortho was the exact same as the terrain since they are both raster layers. In the screen capture below I've resized the main viewer, changed the DEM color map to pseudo-color and adjusted the DEM transparency. This gives me a better idea of elevation over the various parts of the ortho: red is higher elevation while the yellow and greenish-blue parts in the bottom part of the ortho are lower elevation. If the layer is selected I can spot check specific elevation values with the "identify feature" tool in the default toolbar.

- Load the building vectors. The button for adding a vector layer is right beside the button for adding rasters on the main icon panel. Since I had saved my buildings as a shapefile, the process for adding them into the project was simple. They are displayed in the screen capture below, after changing the polygon color. Yes, I was too lazy to extract more than four buildings....

- Create a Roads layer. The next thing I did was to create a new shapefile and digitize a few roads. There are actually two ways to do this: you can use either the native QGIS vector creation and editing tools, or the GRASS plugin. In the screen capture below I used the native QGIS tools, but after experimenting for a bit I like the GRASS editing tools better. For example, you just need to right click to finalize an edit procedure, whereas in QGIS you need to right click, hit an OK button on the attribution panel, and then wait a second or so for it to render. I also like how the GRASS editing tools are in a single editing panel. This makes it easy to switch between tools and perform attribution, and makes editing existing features (e.g. moving vertices) easier.

- Buffering Roads. After digitizing the roads layer I opened up the GRASS Tools, which are available from the GRASS toolset, and buffered the roads.
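A buffer is simply the region within a given distance of a feature. GRASS (v.buffer) builds proper buffer polygons; the Python sketch below only shows the underlying test, whether a point lies within `distance` of a road polyline, using point-to-segment distances. The function names are hypothetical, and coordinates are assumed to be in a projected system so distances come out in map units.

```python
import math


def point_segment_distance(px, py, ax, ay, bx, by):
    """Shortest distance from point P to segment AB."""
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0:  # degenerate segment: A and B coincide
        return math.hypot(px - ax, py - ay)
    # Project P onto the line AB, then clamp to the segment's ends.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len2
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def in_road_buffer(point, road, distance):
    """True if point lies within distance of any segment of the road polyline."""
    px, py = point
    return any(
        point_segment_distance(px, py, *road[i], *road[i + 1]) <= distance
        for i in range(len(road) - 1)
    )
```

Clamping `t` to the segment is what gives a buffer its rounded ends instead of extending infinitely along the line.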


While this is a very simple exercise, what I am trying to illustrate is that by the end of the workflow I was performing pure GIS functions based on vector data. These are "analysis" operations that can be performed independently of the sensor data that was used to create the base map data layers. At this point the imagery may not even be relevant in a real-world project, even though sensor data processed in a softcopy photogrammetry environment provided the original source data. The food for thought here is that knowledge of the base "input" data to a GIS is critical: the decisions you make based on your GIS analyses depend on it. Accuracy issues with the input data (sensor issues or photogrammetric processing errors) can influence the validity of the entire downstream project.

Sunday, April 6, 2008

Sensor to GIS: An Example Workflow (Part 1)

I mentioned in the previous post that photogrammetry is often the relatively unknown link between sensors and GIS. Today I will walk through prepping sensor data, creating data products, and then using them in a GIS.

First of all, a list of the ingredients:

- A softcopy photogrammetry system: in this case it is LPS running on my laptop. The modules required are Core, ATE (automatic terrain extraction), the Terrain Editor, and Stereo Analyst for IMAGINE. I'll describe what each of these is used for as I go through the workflow.

- A GIS: I thought I would give Quantum GIS a whirl. I've been playing around with it lately and it has some interesting functionality. In particular, some pluses are that it is open source, uses GDAL for raster support, and I like how it has a GRASS plugin providing GRASS access within the QGIS environment. This is handy for 2D vector feature extraction and editing, as well as performing some analysis functions with the GRASS geoprocessing modules.

- Input data: for this example I'm going to use some aerial digital frame photography over Los Angeles, coupled with GPS/IMU data. More details on the source data in a future post...

- Set up the "block" in LPS. This is basically where you define the project. The most important part here is to get the projection system correct. This isn't fun to fix if you find out later on that it is wrong...

- Set up the camera file. This provides information about the camera: its focal length, principal point, radial lens distortion parameters, and more.

- Add the images. At this point the images are basically raw and have no orientation or georeferencing information associated with them.

- Import the orientation data. Since the sensor was flown with a GPS/IMU system on board, I needed to import the orientation parameters. These provide X, Y, Z, Omega, Phi, Kappa orientation parameters for each image, which serve as initial orientation data that we can refine further. You can see the layout of the block below.

- Run Automatic Point Measurement (APM). This generates "tie points" via image matching technology; these are used to refine the initial orientation parameters in the next step. Tie points are precise locations that can be identified in two or more overlapping images (the more the better). Since I started with good orientation data, measuring true ground control points isn't necessarily required, provided you have high-grade GPS/IMU hardware and didn't run into any problems (e.g. while computing IMU misalignment angles). I don't actually have ground control for this dataset, but if I did, I would measure the GCPs prior to running APM. The screenshot below shows the dense array of tie points.

- Run the Bundle Adjustment. This reconstructs the geometry of the block and provides XYZ locations for the points measured in the previous step. Blunders (mis-measured points) from that step may have to be removed to achieve a good result. Once the adjustment succeeds with a good RMSE, the results can be checked via a report file and the stereo pairs inspected visually. Y-parallax in stereo would indicate a problem with the adjustment. The screenshot below shows the Staples Center in anaglyph (no stereo on my laptop), with the 3D cursor positioned in XYZ right on top of the building. Since the top of the building is 150 feet off the ground, you can see the "anaglyph effects" on the ground around the building.
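The bundle adjustment above rests on the collinearity condition: the exposure station, an image point, and the corresponding ground point all lie on one straight line. Here is a minimal Python sketch of that model, assuming the common textbook omega-phi-kappa convention (M = R_kappa · R_phi · R_omega). The function names are hypothetical, and LPS or other packages may use different sign and axis conventions. A real bundle adjustment linearizes these equations and solves for every image's orientation parameters (and the tie point coordinates) simultaneously by iterative least squares; this sketch only evaluates the model and the RMSE of a set of residuals.

```python
import math

def rotation_matrix(omega, phi, kappa):
    """Omega-phi-kappa rotation matrix M (ground-to-image), angles in radians.

    Textbook convention M = R_kappa @ R_phi @ R_omega (assumed here);
    software packages differ in sign and axis conventions.
    """
    so, co = math.sin(omega), math.cos(omega)
    sp, cp = math.sin(phi), math.cos(phi)
    sk, ck = math.sin(kappa), math.cos(kappa)
    return [
        [cp * ck, so * sp * ck + co * sk, -co * sp * ck + so * sk],
        [-cp * sk, -so * sp * sk + co * ck, co * sp * sk + so * ck],
        [sp, -so * cp, co * cp],
    ]

def ground_to_image(ground, eo, f, x0=0.0, y0=0.0):
    """Project a ground point (X, Y, Z) into image coordinates via collinearity.

    eo = (XL, YL, ZL, omega, phi, kappa): exterior orientation of the exposure.
    f, x0, y0: focal length and principal point, in the output image units.
    """
    XL, YL, ZL, omega, phi, kappa = eo
    m = rotation_matrix(omega, phi, kappa)
    d = (ground[0] - XL, ground[1] - YL, ground[2] - ZL)
    # Rotate the ground-space vector into the image axes.
    u, v, w = (sum(m[i][j] * d[j] for j in range(3)) for i in range(3))
    return x0 - f * u / w, y0 - f * v / w

def rmse(residuals):
    """Root mean square error of a list of residuals."""
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))
```

For a vertical photo (all angles zero) taken 1000 m above a point, a ground point 100 m to the east projects to x = f · 100 / 1000, i.e. 15.3 mm for a 153 mm lens: `ground_to_image((1100.0, 2000.0, 500.0), (1000.0, 2000.0, 1500.0, 0.0, 0.0, 0.0), f=153.0)`.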

At this point, I now have triangulated images and can start creating data products: terrain, orthophotos, and 3D features.

- Generate Terrain. For orthophoto generation, I need a "bare-earth" terrain model. Normally terrain would be generated by running Automatic Terrain Extraction, which runs an autocorrelation algorithm to generate terrain points. However, since there are so many skyscrapers in the block, it made more sense for this example to simply use the APM points and filter out tie points that had been correlated on buildings. Normally this wouldn't cut it and I would need LIDAR, autocorrelated, or compiled terrain, but for the sake of expediency the APM-derived surface model should be fine.

- Generate Orthophotos. Orthophotos are the #1 data product produced by photogrammetric processing. Most commercial applications have orthorectification capability; the user interface for LPS' orthorectification tool is below. After orthorectification, there may be a need to produce a final mosaic or a tiled ortho output.

And voilà: here is an ortho. In this case I also mosaicked a few of the images together:
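The building filtering in the "Generate Terrain" step can be illustrated with a deliberately crude approach: bin the APM points into a coarse grid and drop any point that sits well above the lowest point in its cell. This is a sketch under stated assumptions (the cell size is larger than any building footprint, and the terrain is fairly flat within each cell). The function name and parameters are hypothetical; it is not how LPS filters terrain, and it will misclassify points on steep slopes.

```python
from collections import defaultdict

def filter_bare_earth(points, cell_size, max_height_above_min):
    """Keep points close to the lowest point in their grid cell (hypothetical helper).

    points: iterable of (x, y, z) tuples, e.g. tie points from APM.
    cell_size: grid cell size in ground units; assumed larger than the
        biggest building footprint so every cell contains some ground.
    max_height_above_min: points higher than this above the cell minimum
        are treated as above-ground features (buildings, trees) and dropped.
    """
    cells = defaultdict(list)
    for p in points:
        key = (int(p[0] // cell_size), int(p[1] // cell_size))
        cells[key].append(p)
    kept = []
    for pts in cells.values():
        zmin = min(p[2] for p in pts)
        kept.extend(p for p in pts if p[2] - zmin <= max_height_above_min)
    return kept
```

A production filter would typically compare each point against a locally fitted surface rather than a flat cell minimum, which is what makes slopes tractable.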

- 3D Feature Extraction. For the example workflow I extracted a few 3D buildings in Stereo Analyst for IMAGINE, which is an ERDAS IMAGINE and LPS add-on module. That said, a number of commercial software packages offer similar functionality. The nice thing about Stereo Analyst is that it doesn't require a third-party CAD/GIS package to run on top of, and you can extract 3D shapefiles and textures, and also export to KML. Here's a screen capture of one of the (very few) buildings I extracted. Again, I'm working in anaglyph since I'm on my laptop.
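Since Stereo Analyst can export to KML, here is a rough idea of what a single extruded building looks like in that format. This is a hypothetical Python helper, not Stereo Analyst's exporter. It assumes a footprint in WGS84 longitude/latitude and a single roof height, and writes a KML Polygon with `extrude` and `relativeToGround` so that Google Earth draws walls from the roof down to the terrain. Textured buildings would instead use a KML `Model` element referencing a COLLADA file.

```python
from xml.sax.saxutils import escape

def building_to_kml(name, footprint, height_m):
    """Return a KML document containing one extruded building polygon.

    footprint: list of (lon, lat) vertices in WGS84 decimal degrees.
    height_m: roof height above ground in metres (assumed flat roof).
    """
    ring = list(footprint)
    if ring[0] != ring[-1]:
        ring.append(ring[0])  # KML LinearRings must be closed
    coords = " ".join(f"{lon},{lat},{height_m}" for lon, lat in ring)
    return f"""<?xml version="1.0" encoding="UTF-8"?>
<kml xmlns="http://www.opengis.net/kml/2.2">
  <Placemark>
    <name>{escape(name)}</name>
    <Polygon>
      <extrude>1</extrude>
      <altitudeMode>relativeToGround</altitudeMode>
      <outerBoundaryIs>
        <LinearRing>
          <coordinates>{coords}</coordinates>
        </LinearRing>
      </outerBoundaryIs>
    </Polygon>
  </Placemark>
</kml>"""
```

Writing the returned string to a `.kml` file should be enough for Google Earth to open it.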

Between Sensors and GIS Content

One of the things that has always struck me as unusual is that there does not seem to be much insight into the role of photogrammetry in the broader geospatial community. For example, Google “GIS data” and you’ll get a lot of hits on various GIS data sources, including street maps, census data, building footprints, cadastral data, and so forth. However, it is important to keep in mind that this data is typically not first-generation data, but rather a derived product. Often the data lineage is not tracked (or at least not tracked directly back to the sensor), so users making business decisions based on the data have no insight into how the data was developed, potential problems with the data, its true accuracy, and other issues…

At any rate, the point of this post is that photogrammetric processing provides the link between satellite/airborne sensors and GIS data. How and why? Geospatial data is frequently derived from some sort of sensor, be it airborne imagery, satellite imagery, LIDAR, GPS, or any other measurement sensor technology. This is how we get the “Geographic” part of GIS. All that great orthorectified imagery you see as a layer in a commercial GIS or in Google Earth, Microsoft Virtual Earth, or ERDAS TITAN typically goes through some sort of photogrammetric processing. The Virtual Earth 3D blog has a good post on how UltraCamX sensor data is processed for delivery in Virtual Earth. The post doesn't contain the technical details of exactly what sort of processing is applied, as the exact nuts and bolts of the workflow are likely a Microsoft/Vexcel trade secret at the moment. The general workflow is fairly well known, though: photogrammetric processes can produce all sorts of primary base map and other data (mainly orthos, terrain, and 3D feature data) as input into a spinning globe app or a GIS...

Stay tuned for the next post and I will walk through a "photogrammetry to GIS" technical example...

Saturday, April 5, 2008

Article Update: Photogrammetry Workflows, Present and Future

In this post I thought I would update an article I wrote last year that provides an intro to photogrammetric workflows and some thoughts on the latest technology. Originally published last May in GIS Development, this version has updated content and I've also added in links to further information on the various topics discussed throughout the article.

Hope you enjoy!

Introduction

The photogrammetric workflow has been relatively static since the advent of digital photogrammetry. Numerous application tools are dedicated to various parts of the workflow, but the actual photogrammetric tasks have seen little change in recent years. However, we are beginning to see changes in the workflows. The growing proliferation of “new” technologies such as LIDAR, pushbroom, and satellite sensors has caused many commercial vendors to re-examine the application tools they offer. In addition, advances in information technology have opened up the possibility of processing increasingly large quantities of data. This, coupled with improved processing capabilities and network bandwidth, is also changing traditional photogrammetric workflows.

Background

ERDAS has a long history of providing both analytical and digital photogrammetry solutions. As a Hexagon company, ERDAS’ mapping legacy dates back to the 1920s with the founding of Kern Aarau and Wild Heerbrugg. These companies were consolidated into Leica and over the years offered analogue, analytical, and digital photogrammetry and mapping solutions. LH Systems, ERDAS, and Azimuth Corp. were acquired by Leica Geosystems in 2001. These acquisitions allowed Leica to enter a number of spaces in the digital photogrammetry market and offer comprehensive photogrammetric solutions to the production photogrammetry, defense, and GIS markets.

ERDAS’ initial photogrammetric offerings, OrthoBase and Stereo Analyst for IMAGINE, were targeted at the GIS user community. As demand for 3D data grew in the GIS community, Leica Geosystems sought to provide easy-to-use tools for producing “oriented” images from airborne or satellite data and extracting 3D information such as building and road data. With the acquisition of LH Systems in 2001, Leica Geosystems inherited a staff and customer base skilled in production photogrammetry. This new customer base required engineering-level accuracy and primarily worked with large-scale airborne photography in the commercial arena and satellite imagery in the defense market. In early 2004 Leica Geosystems released the Leica Photogrammetry Suite (now LPS). This new product suite initially combined updated components from OrthoBase and OrthoBase Pro with newly developed technology for stereo viewing and terrain editing. Shortly thereafter, mature products such as PRO600 and ORIMA were integrated into the product suite, and numerous update releases increased productivity. In April 2008, the Leica Geosystems Geospatial Imaging division was re-branded as ERDAS.

Current Workflows

When asked about the “photogrammetric workflow”, most industry professionals will refer to the analog frame camera (e.g. RC30) workflow. Analog frame cameras were prevalent during the transition to digital photogrammetry and remain a common source of imagery. Numerous software tools have been developed to guide users through the traditional analog frame workflow. Popular vendors include BAE, INPHO (now owned by Trimble), Intergraph, and ERDAS. A brief outline of the mainstream analog frame workflow is provided below.

· Scanning process: Airborne camera film is scanned and converted into a digital file format. Some high performance scanners perform interior orientation (IO) as well.

· Image Dodging: Scanning may introduce radiometric problems such as hotspots (bright areas) and vignetting (dark corners). These can be reduced by applying a dodging algorithm. Dodging, in the digital photogrammetry sense of the word, generally calculates a set of input statistics describing the radiometry of a group of images. Then, based on user preferences, it generates a target output value for every input pixel. Each output pixel is then shifted from its current DN value toward its target DN, subject to several user parameters and constraints. Typically there are options for calculating global statistics across a group of images, which has the net effect of balancing out large radiometric differences between images. Overall, this helps resolve the aforementioned problems and “evens out” the radiometry both within individual images and across groups of imagery.

· Project setup: most photogrammetric packages have an initial step where the operator performs tasks such as defining a coordinate system for the project, adding images to the project, and providing the photogrammetric system with general information about the project. Ancillary information may include data such as flying height, sensor type, the rotation system, and photo direction.

· Camera Information: the operator needs to provide information about the type of camera used in the project. Typically the camera information is stored in an external “camera file” and may be used many times after it is initially defined. It contains information such as focal length, principal point offset, fiducial mark information, and radial lens distortion. Camera file information is typically gathered from the camera calibration report associated with a specific camera.

· Interior Orientation (IO): The interior orientation process relates film coordinates to the image pixel coordinate system of the scanned image. IO can often be performed as an automatic process if it was not performed during the scanning process.

· Aerial Triangulation (AT): The AT process serves to orient images in the project to both one another and a ground coordinate system. The goal is to solve the orientation parameters (X, Y, Z, omega, phi, kappa) for each image. True ground coordinates for each measured point will also be established. The AT process can be the most time-consuming and critical component of the digital photogrammetry workflow. Sub-components of the AT process include:

o Measuring ground control points (typically surveyed points).

o Establishing an initial approximation of the orientation parameters (rough orientation).

o Measuring tie points. This is often an automatic procedure in digital photogrammetry systems.

o Performing the bundle adjustment.

o Refining the solution: this involves removing or re-measuring inaccurate points until the solution is within an acceptable error tolerance. Most commercial software packages contain an error reporting mechanism to assist in refining the solution.

· Terrain Generation: Digital orthophotos are one of the primary end-products in the photogrammetric workflow, and accurate terrain models are an essential ingredient in generating them. Terrain models are also useful products in their own right, with uses in many vertical market applications (e.g. hydrology modeling, visual simulation applications, line-of-sight studies, etc.). Terrain models can take the form of TINs (Triangulated Irregular Networks) or Grids. Once AT is complete, terrain generation can typically be run as an automatic process in most photogrammetric packages. Automatic terrain generation algorithms typically match “terrain points” on two or more images (more images increase the reliability of the point). Seed data such as manually extracted vector files, control points, or other data can often be input to help guide the correlation process. There are usually filtering options to remove blunders, also referred to as “spikes” or “wells” in the output terrain model. Filtering can also help remove surface features such as buildings and trees, which is a great help if the desired output is a “bare-earth” terrain model. It is important to note that terrain may also be acquired via manual compilation (in stereo), LIDAR, IFSAR (Interferometric Synthetic Aperture Radar), or publicly available datasets such as SRTM.

· Terrain Editing: Digital terrain models (DTMs) that have been generated by autocorrelation procedures typically require some “cleanup” to model the terrain to the required level of accuracy. Most photogrammetric packages include some capability for editing terrain in stereo. It is important for operators to see the terrain graphics rendered over imagery in stereo so that they can determine whether automatically generated terrain posts are indeed “on the ground”; that is, whether the DTM is an accurate representation of the terrain, or at least accurate enough for the project at hand. Terrain can usually be rendered using a mesh, contours, points, and breaklines. The operator usually has control over which rendering method is used (it could be a combination) as well as various graphic details such as contour spacing, color, line thickness, and more. Terrain editing applications usually provide a number of tools for editing TIN and Grid terrain models. In addition to individual post editing (e.g. add, delete, and move for TIN posts, adjust Z for Grid cells), area editing tools can be used for a number of operations. These may include smoothing, surface fitting operations, spike and well removal tools, and so on. Geomorphic tools can be used for editing linear features such as a row of trees or hedges. After a terrain edit has been performed, the system updates the display in the viewer so that the operator can assess the accuracy and validity of the edit. Once the editing process is complete, the user may have to convert the model into a customer-specified output format (e.g. from one TIN format to another, or from TIN to Grid). DTMs are increasingly a customer deliverable in their own right; as mentioned previously, they have many uses and are becoming widespread in a variety of applications.

· Feature Extraction: Planimetric feature extraction is usually an optional step in the workflow, depending on the project specifications. Automatic 3D feature extraction algorithms are under development, but manual stereo extraction is still the predominant method. Feature extraction tools in digital photogrammetry packages typically allow users to collect, edit, and attribute point, line, and polygon features. Features can be products in themselves, feeding into a 3D GIS or CAD environment. Alternatively, building features may be fed back into the photogrammetric processing chain to produce “true orthos”, which take surface features into account to produce imagery with minimized building lean; this can be particularly beneficial in urban environments.

· Orthophoto Generation and Mosaicking: Digital orthophotos are usually the primary final product derived from the photogrammetric workflow. There are many different customer specifications for orthos, covering accuracy, radiometric quality, GSD, output tile definitions, output projection, output file format, and more. A mosaicking process is usually included in the ortho workflow to produce a smooth, seamless, and radiometrically appealing product for the entire project area. Mosaicking may be performed directly as part of the orthophoto process (ortho-mosaicking) or later as a separate post-process. Generally, orthophoto production follows these steps:

o Input image selection: the operator chooses the images to be orthorectified.

o Terrain source selection: the operator chooses the DTM to be used for orthorectification. This is a critical step, as the accuracy of the orthophoto is determined by the accuracy of the terrain. A terrain model with gross errors (e.g. a hill not modeled correctly) will result in geometric errors in the resulting orthophoto.

o Define orthophoto options: Operators typically select a number of options for the orthorectification process. These may include output GSD, the image resampling method, projection, output coordinates, and more.
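The interior orientation step in the list above is commonly modeled as a 2D affine transformation between scanned pixel coordinates and the calibrated fiducial coordinates from the camera calibration report. The sketch below, in plain Python with hypothetical function names, fits the six affine parameters by least squares from three or more measured fiducials (solving the 3x3 normal equations with Cramer's rule). Production software may offer other transformation models as well and will report residuals per fiducial.

```python
def _solve3(a, b):
    """Solve the 3x3 linear system a @ p = b with Cramer's rule."""
    def det(m):
        return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))
    d = det(a)
    # Replace column k with b to get the k-th unknown.
    return [det([row[:k] + [b[i]] + row[k + 1:] for i, row in enumerate(a)]) / d
            for k in range(3)]

def fit_affine_io(pixel_pts, film_pts):
    """Least-squares 6-parameter affine from pixel (col, row) to film (x, y).

    pixel_pts: measured fiducial positions in the scanned image.
    film_pts: calibrated fiducial coordinates from the camera report (mm).
    Returns (a, b) with x = a0 + a1*col + a2*row and y = b0 + b1*col + b2*row.
    """
    rows = [(1.0, c, r) for c, r in pixel_pts]
    # Normal equations: (A^T A) p = A^T x and (A^T A) q = A^T y.
    ata = [[sum(p[i] * p[j] for p in rows) for j in range(3)] for i in range(3)]
    atx = [sum(p[i] * f[0] for p, f in zip(rows, film_pts)) for i in range(3)]
    aty = [sum(p[i] * f[1] for p, f in zip(rows, film_pts)) for i in range(3)]
    return _solve3(ata, atx), _solve3(ata, aty)

def pixel_to_film(a, b, col, row):
    """Apply the fitted interior orientation to one pixel position."""
    return a[0] + a[1] * col + a[2] * row, b[0] + b[1] * col + b[2] * row
```

With four or eight fiducials the system is overdetermined, so the residual at each fiducial gives a quick check on measurement quality.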

Another important aspect is radiometry. While some operators will tackle radiometry early on in the workflow (as previously discussed in the “Image Dodging” step), others will dodge or apply other radiometric algorithms during the orthomosaic production process. The goal is to make the output group of images radiometrically homogeneous. This will result in a visually appealing output mosaic that has consistent radiometric qualities across the group of images comprising the project area.
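As a rough illustration of the global-statistics side of dodging, the sketch below linearly rescales each image so that its mean and standard deviation match a group-wide target. It is a simplified stand-in with hypothetical names, not the ERDAS dodging algorithm. Real dodging also uses local statistics (for example over blocks or moving windows within each image) to remove hotspots and vignetting, which a single gain and offset per image cannot do.

```python
import statistics

def global_balance(images, target_mean=None, target_std=None):
    """Linearly rescale each image so its mean/std match a group target.

    images: dict of name -> flat list of DN values (one band, 8-bit assumed).
    If no target is given, the average of the per-image means and standard
    deviations is used, which pulls outlier images toward the group.
    Output DNs are rounded and clipped to 0..255.
    """
    stats = {n: (statistics.fmean(v), statistics.pstdev(v)) for n, v in images.items()}
    if target_mean is None:
        target_mean = statistics.fmean(m for m, _ in stats.values())
    if target_std is None:
        target_std = statistics.fmean(s for _, s in stats.values())
    out = {}
    for name, values in images.items():
        mean, std = stats[name]
        gain = target_std / std if std > 0 else 1.0
        out[name] = [min(255, max(0, round((dn - mean) * gain + target_mean)))
                     for dn in values]
    return out
```

For example, a dark image `[10, 20, 30]` and a bright image `[110, 120, 130]` both map to `[60, 70, 80]`, since they share the same spread and the group mean is 70.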

A project area may be several hundred square kilometers in size, so a single output mosaic file is usually impractical. End customers usually cannot handle a single large file and prefer to receive their digital orthomosaic as a series of tiles defined by their specification. Most photogrammetric systems provide a way to define a tiling system that the orthomosaicking application can ingest to produce a seamless tiled output product.
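A tiling scheme like the one described above often boils down to a regular grid snapped to a fixed origin. Here is a minimal sketch with hypothetical names, assuming square tiles in projected ground units (e.g. metres) and rows numbered northward from the origin. Real customer specifications often add tile naming conventions, overlap or buffer requirements, or row numbering from the top.

```python
import math

def tile_grid(xmin, ymin, xmax, ymax, tile_size, origin_x=0.0, origin_y=0.0):
    """Return (col, row, bbox) for every tile touching the project extent.

    Tiles are square, tile_size ground units wide, and snapped to a grid
    anchored at (origin_x, origin_y) so that adjacent projects line up.
    bbox is (x_min, y_min, x_max, y_max) in the same projected units.
    """
    c0 = math.floor((xmin - origin_x) / tile_size)
    c1 = math.ceil((xmax - origin_x) / tile_size)
    r0 = math.floor((ymin - origin_y) / tile_size)
    r1 = math.ceil((ymax - origin_y) / tile_size)
    tiles = []
    for r in range(r0, r1):
        for c in range(c0, c1):
            x = origin_x + c * tile_size
            y = origin_y + r * tile_size
            tiles.append((c, r, (x, y, x + tile_size, y + tile_size)))
    return tiles
```

For a 2 km by 1 km extent starting at (500, 500) and 1 km tiles, `tile_grid(500, 500, 2500, 1500, 1000)` yields six tiles, because the extent straddles tile boundaries in both directions.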

In recent years, high-resolution satellite imagery and airborne pushbroom sensors such as the ADS40 have added new variations to the traditional workflow. Both types of sensor produce data that are digital from the point of capture, eliminating the need to scan film photography. Commercial satellite imagery (e.g. CARTOSAT, ALOS) has become available at increasingly high resolution (e.g. 80cm for CARTOSAT-2). While this is sufficient for many mapping projects, some engineering-level applications still require the resolution available from airborne sensors.

Pushbroom sensors such as the ADS40 can achieve a ground sample distance in the 5-10cm range. Modern digital airborne sensors are also usually mounted with a GPS/IMU system. GPS (Global Positioning System) technology assists mapping projects by combining a series of base stations in the project area with a constellation of satellites whose positional signals are received by the GPS receiver on board the aircraft. IMUs (Inertial Measurement Units) are increasingly used to establish precise orientation angles (pitch, yaw, and roll) for the sensor platform in relation to the ground coordinate system. GPS and IMU information can be extremely beneficial for mapping areas where limited ground control is available (e.g. rugged terrain). They also assist the triangulation process by providing highly accurate initial orientation data, which is then further refined by the bundle adjustment. GPS and IMU information can also be used for “direct georeferencing”, which bypasses the time-consuming AT process. However, direct georeferencing is not a universally accepted methodology within the mapping community. The caveat is that project accuracy may suffer; however, this may be acceptable for rapid response mapping and other projects where lower accuracy is adequate for the end customer.
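Direct georeferencing essentially runs the collinearity equations in reverse: with the exterior orientation taken straight from GPS/IMU, each image ray is intersected with the ground. The sketch below makes strong simplifying assumptions, noted here and in the code: the IMU roll/pitch/yaw have already been converted to omega/phi/kappa, lever-arm and boresight corrections have already been applied, and the ground is a horizontal plane at a known elevation rather than a DTM. The names are hypothetical, and the rotation convention is the textbook one, which may not match a given package.

```python
import math

def rotation_matrix(omega, phi, kappa):
    """Textbook omega-phi-kappa matrix M (ground-to-image), radians (assumed convention)."""
    so, co = math.sin(omega), math.cos(omega)
    sp, cp = math.sin(phi), math.cos(phi)
    sk, ck = math.sin(kappa), math.cos(kappa)
    return [
        [cp * ck, so * sp * ck + co * sk, -co * sp * ck + so * sk],
        [-cp * sk, -so * sp * sk + co * ck, co * sp * sk + so * ck],
        [sp, -so * cp, co * cp],
    ]

def image_to_ground(x, y, eo, f, ground_z, x0=0.0, y0=0.0):
    """Intersect the image ray through (x, y) with the plane Z = ground_z.

    eo = (XL, YL, ZL, omega, phi, kappa), assumed already corrected for
    lever arm and boresight misalignment. A flat ground plane stands in
    for the DTM a real direct georeferencing workflow would use.
    """
    XL, YL, ZL, omega, phi, kappa = eo
    m = rotation_matrix(omega, phi, kappa)
    img = (x - x0, y - y0, -f)
    # Rotate the image vector into ground axes with the transpose of M.
    dx, dy, dz = (sum(m[j][i] * img[j] for j in range(3)) for i in range(3))
    scale = (ground_z - ZL) / dz
    return XL + scale * dx, YL + scale * dy
```

For a vertical photo 1000 m above the ground plane, an image point at x = 15.3 mm with a 153 mm lens lands 100 m east of the nadir point; with kappa rotated 90 degrees the same image point lands 100 m north instead.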

Thoughts on Current and Future Photogrammetric Workflows

We are beginning to see some shifts in the currents guiding photogrammetric workflows. These shifts are being driven by advances in computing hardware, new sensor technology, and enterprise solutions.

Data storage and dissemination is a dynamic area in the industry. While imagery was traditionally backed up on tape systems, the cost of storage has declined dramatically in recent years. As customer demand for high-resolution data increases, it is becoming less practical for users to store data directly on their workstations. Users are increasingly storing imagery on servers and employing different methods for accessing it. Demand appears to be on the increase for tools to manage and archive data. Organizations are also examining the possibility of sharing and publishing data. The data may be stored on servers and published via web services, or made available for access, subscription, or purchase via a portal.

Sensor hardware is also rapidly changing the photogrammetric workflow. LIDAR has now been widely adopted and accepted, providing extremely high-density and high-accuracy terrain data. There is also a growing trend of integrating LIDAR with digital frame sensors, which enables simultaneous collection of optical and terrain data and, in turn, rapid digital orthophoto processing. This is much more cost-effective than flying a project area with multiple sensors for image and terrain data. When coupled with airborne GPS and IMU technology, terrain and georeferenced imagery (the primary ingredients for orthos) can be available shortly after the data is downloaded following a flight. IFSAR mapping systems are also a growing source of terrain data.

Coupled with the explosion of imagery is the need to process it efficiently. One method that researchers and software vendors have begun exploring is distributed processing. Under this model a processing job is divided into portions that are submitted to remote “processing nodes”, which significantly improves overall throughput for large projects. Most commercial efforts, such as the ERDAS Ortho Accelerator, have focused on ortho processing. However, there are also several other photogrammetric tasks that lend themselves to distributed processing solutions (e.g. terrain correlation, point matching, etc.).
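The split-and-dispatch pattern behind distributed processing can be sketched on a single machine with Python's `concurrent.futures`: independent jobs (here, output ortho tiles) are mapped onto a pool of workers and the results are collected in order. The `process_tile` function is a hypothetical placeholder rather than a real ortho engine, and a production system such as the ERDAS Ortho Accelerator would dispatch work to remote processing nodes rather than local processes. The executor class is a parameter so the same driver can run with processes, threads, or another `Executor` implementation.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor

def process_tile(tile):
    """Hypothetical placeholder for orthorectifying one output tile."""
    col, row = tile
    # A real worker would read imagery, the DTM, and orientation data here.
    return f"ortho_{col}_{row}.tif"

def run_distributed(tiles, workers=4, executor_cls=ProcessPoolExecutor):
    """Run process_tile over independent tiles in parallel.

    Executor.map returns results in the same order as the input tiles.
    """
    with executor_cls(max_workers=workers) as pool:
        return list(pool.map(process_tile, tiles))

if __name__ == "__main__":
    # Processes suit CPU-bound work; threads are handy for quick tests.
    print(run_distributed([(c, r) for r in range(2) for c in range(3)]))
    print(run_distributed([(0, 0), (1, 0)], executor_cls=ThreadPoolExecutor))
```

Because each tile is independent, throughput scales roughly with the number of workers until I/O to the shared imagery store becomes the bottleneck.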

With data increasingly stored in network locations and the general adoption of database management systems, enterprise photogrammetric solutions will likely change the face of the classical photogrammetric workflow. As imagery and other geospatial data move onto servers, the processing framework is likely to change so that the operator interacts with a client application that kicks off photogrammetric and geospatial processing operations. Rather than running a heavy “digital photogrammetry workstation” (DPW), the operator will work through a client view into the project. Geospatial servers will also enable organizations to store and reuse project and other data. For example, automatic correlation processes could identify and use seed data stored in online databases, or terrain data stored from previous jobs. The notion of collecting data once and using it many times will be prevalent. Large quantities of data, such as airborne and terrestrial LIDAR-derived point clouds, will be stored and have operations such as filtering, classification, and 3D feature extraction applied to them. With a shift to enterprise solutions, industry adoption of open standards (e.g. those of the Open Geospatial Consortium) will be critical. Open and extensible systems will allow organizations to customize workflows to meet their specific needs and get full value from their investment in enterprise technology.

Conclusions

This is an exciting time for those of us in the photogrammetry group at ERDAS. Recent trends discussed above have opened up new avenues for changing, modernizing, and empowering what was until recently a relatively static workflow. Our customers drive us to deliver solutions that meet a variety of needs. While there is the constant need to pay attention to existing workflows, it is important to keep an eye on technology trends that will guide future workflow directions.